In this week's roundup, I'm gathering some of the most important and interesting data, technology & digital law news from the past week.

Legal and regulatory developments

A) New laws, regulatory proposals, enforcement actions

The UK Parliament passed the Data (Use and Access) Bill, which reforms the UK General Data Protection Regulation (UK GDPR) and the Privacy and Electronic Communications Regulations (PECR). Following this approval, the bill only needs Royal Assent, which is considered a formality. (The last bill to be refused assent was the Scottish Militia Bill in 1708, during Queen Anne's reign.)

Why does this matter? The Data (Use and Access) Bill introduces significant changes (e.g. a new legal basis of “recognised legitimate interests” and a simplification of the rules on automated decision-making). However, the bill is not only about data protection: it also covers topics such as “smart data”, electronic signature rules, trust services, and more.

Comment: The European Commission proposed extending the two UK adequacy decisions under the GDPR and the LED, which are set to expire on 27 June 2025, in order to await the outcome of the UK’s data protection reform. After the passage of the new bill, the evaluation of the updated UK legal framework on data protection can take place. (Besides the Data (Use and Access) Bill, the UK Investigatory Powers Act may also be a very important topic in the review of the adequacy decisions. For a summary of challenges to UK data adequacy, please see the EPRS summary released in March 2025. Earlier this year, the UK government required Apple under the Investigatory Powers Act to circumvent its encryption protections for users. There is an ongoing debate on this topic and its potential impact on EU-UK data transfers. Even WhatsApp backs Apple in this legal dispute.)

US President Donald J. Trump signed an Executive Order to strengthen cybersecurity by focusing on critical protections against foreign cyber threats and enhancing secure technology practices.

Why does this matter? The Order, among other things, directs (i) the Federal government to advance secure software development, (ii) department and agency level action on border gateway security to defeat hijacking of network interconnections, (iii) department and agency level actions on post-quantum cryptography, (iv) adoption of the latest encryption protocols, (v) artificial intelligence (AI) cybersecurity efforts towards identifying and managing vulnerabilities, and (vi) technical measures to promulgate cybersecurity policy, including machine readable policy standards and formal trust designations for “Internet of Things”. “It also limits the application of cyber sanctions only to foreign malicious actors, preventing misuse against domestic political opponents and clarifying that sanctions do not apply to election-related activities.”

The Norwegian Data Protection Authority has carried out an inspection of six websites’ use of tracking pixels. All of the websites unlawfully shared personal data of website visitors with third parties, and in several of the cases, the personal data shared were of a sensitive nature. In one of the cases, a fine of NOK 250 000 was imposed. The Norwegian DPA also published guidance on the topic. (At the same time, the French Data Protection Authority, CNIL, is opening a public consultation on its draft recommendation on the use of tracking pixels in emails. The objective is to help the actors who use these trackers to better understand their obligations, particularly in terms of collecting user consent.)

Why does this matter? Since many websites use the same or similar technologies, the results of the inspections and guidance can encourage more cautious implementation of tracking pixels and prevent future violations.

Disney and Universal sued Midjourney over its image generator, alleging copyright infringement. (A copy of the lawsuit is available here.)

Why does this matter? The complaint says that “Midjourney’s bootlegging business model and defiance of U.S. copyright law are not only an attack on Disney, Universal, and the hard-working creative community that brings the magic of movies to life, but are also a broader threat to the American motion picture industry which has created millions of jobs and contributed more than $260 billion to the nation’s economy. This case is not a ‘close call’ under well-settled copyright law.” “Disney and NBCU are seeking unspecified monetary damages, as well as “preliminary and/or permanent injunctive relief enjoining and restraining Midjourney” from infringing on or distributing their copyrighted works.” (see Variety’s report)

Comment: Copyright is one of the main battlegrounds in the development and deployment of AI services. The outcome of lawsuits such as the one against Midjourney and the one brought by the New York Times against OpenAI will significantly impact the AI landscape.

B) Guidelines, opinions & more

The International Conference on AI and Human Rights concluded with the adoption of the Doha Declaration on Artificial Intelligence and Human Rights. (The full declaration can be downloaded here.)

Why does this matter? The declaration includes “several recommendations and ideas aimed at ensuring the protection and promotion of human rights in light of the growing impact of AI.”

The UK Information Commissioner's Office (ICO) announced the launch of its AI and biometrics strategy.

Why does this matter? This strategy sets out how the ICO will: “(i) set clear expectations for responsible AI through a statutory code of practice for organisations developing or deploying AI and automated decision-making, to enable innovation while safeguarding privacy; (ii) secure public confidence in generative AI foundation models by working with developers to ensure they use people’s information responsibly and lawfully in training these models; (iii) ensure that automated decision-making (ADM) systems are governed and used in a way that is fair to people, focusing on how they are used in recruitment and in public services; and (iv) ensure the fair and proportionate use of facial recognition technology (FRT), working with law enforcement to ensure that the technology is effective and people’s rights are protected.”

The European Data Protection Supervisor (EDPS) and the Spanish Data Protection Authority (AEPD) published a new paper in the TechDispatch series on Federated Learning (FL). (The document is available in English and in Spanish.)

Why does this matter? “FL offers a promising approach to machine learning by enabling multiple devices to collaboratively train a shared model while keeping data (including personal data where applicable) decentralised. This method is particularly advantageous for scenarios involving the processing of sensitive personal data or regulatory requirements, as it mitigates privacy risks by ensuring that raw personal data remains on local devices. By keeping personal data decentralised, FL aligns with core data protection principles such as data minimisation, accountability and security, and reducing the risk of large-scale personal data breaches. […] FL presents challenges that need to be addressed to ensure effective protection of data. One major concern is the potential for data leakage through model updates, where attackers might infer information from gradients or weights shared between devices and central servers. This risk, along with potential membership inference attacks and the difficulty in detecting and mitigating bias or ensuring data integrity, highlights the need for robust security measures throughout the FL ecosystem and for the combination of FL with some other PETs.”
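To make the mechanism described in the quote more tangible, here is a minimal sketch of federated averaging, one common way of aggregating locally trained models. It is not taken from the TechDispatch paper: the toy linear model, the function names (`local_update`, `federated_average`, `run_rounds`) and all parameter values are assumptions chosen for illustration. The point is only that the "server" sees model weights, never the raw data points. The sketch includes no secure aggregation or differential privacy, so it does not address the leakage risks mentioned above.

```python
from typing import List, Tuple

Weights = List[float]                 # hypothetical flat parameter vector [w, b]
Dataset = List[Tuple[float, float]]   # (x, y) pairs that stay on the client


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Train y = w*x + b on one client's own data with plain gradient descent."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error on this client's data only.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: List[Weights],
                      client_sizes: List[int]) -> Weights:
    """Combine client models, weighting each by its number of samples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def run_rounds(global_weights: Weights, clients: List[Dataset],
               rounds: int = 50) -> Weights:
    """Each round: clients train locally, the server averages the results."""
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        # Only the weight vectors reach the server, not the (x, y) pairs.
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    # Two hypothetical clients holding points from the same line y = 2x + 1.
    clients = [
        [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
        [(3.0, 7.0), (4.0, 9.0)],
    ]
    print(run_rounds([0.0, 0.0], clients, rounds=200))
```

Even in this toy setting, the shared weight updates are derived from the local data, which is why the paper's warning about inference from gradients or weights applies.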

C) Publications, reports

The Africa-Asia AI Policymaker Network and the BMZ initiative FAIR Forward – Artificial Intelligence for All published an AI Policy Playbook focusing on “the real-world complexities of AI policymaking in the Global South. It draws from lived experience across seven partner countries—Ghana, India, Indonesia, Kenya, Rwanda, South Africa, and Uganda—to offer more than just principles but practical tools, insights, and strategies to shape policy that serves local needs.”

Why does this matter? “The AI Policy Playbook outlines essential components for policymakers to consider when cooperating to chart their own AI paths and developing context-specific AI governance frameworks to support responsible and open AI ecosystems in Africa and Asia.”

The NATO Strategic Communications Centre of Excellence published a report titled “Virtual Manipulation Brief 2025: From War and Fear to Confusion and Uncertainty”.

Why does this matter? “The Virtual Manipulation Brief 2025 analyses the shifting landscape of digital influence operations targeting NATO, Ukraine, the European Union, and the United States. The current issue marks a complete redesign of our data collection and processing pipeline, expanding from only two social media platforms to ten. This significant change necessitated a fundamental restructuring of the baseline (our reference data and metrics for measuring manipulation), which will guide our future research in this area. An estimated ~7.9 % of all the interactions we tracked show statistics-based signs of coordination. Kremlin-aligned messaging bursts were roughly twice as frequent as their pro-Western counterparts, and about three times as frequent for the posts that appeared on more than one platform. This implies the broader reach and tighter synchronisation of Kremlin-orchestrated operations.”

The UK AI Security Institute published research evaluating frontier AI model safeguards and how to make those evaluations actionable. (The research paper is available here. The “Interactive AI misuse risk model” referred to in the research paper is available here.)

Why does this matter? “The safety case we present offers a concrete pathway—though not the only pathway—for connecting safeguard evaluation results to actionable risk assessments. Rather than providing a patchwork of disconnected evidence, our approach creates an end-to-end argument that connects technical evaluations with real-world deployment decisions.”

The Future of Privacy Forum (FPF) published a new paper on data minimization trends in the US (“Data Minimization’s Substantive Turn: Key Questions & Operational Challenges Posed by New State Privacy Legislation”).

Why does this matter? “In recent years, data minimization has emerged as a contested and priority issue in privacy legislation. Under many existing state privacy laws, companies have been subject to “procedural” data minimization requirements whereby collection and use of personal data is permitted so long as it is adequately disclosed or consent is obtained. As privacy advocates have pushed to shift away from notice-and-choice, some policymakers have begun to embrace new “substantive” data minimization rules that aim to place default restrictions on the purposes for which personal data can be collected, used, or shared, typically requiring some connection between the personal data and the provision or maintenance of a requested product or service. This white paper explores this ongoing trend towards substantive data minimization, with a focus on the unresolved questions and policy implications of this new language.”

The Center for Strategic and International Studies published its “Space Threat Assessment 2025” report.

Why does this matter? “Rather than entirely new developments, the past year mostly witnessed a continuation of the worrisome trends discussed in prior reports, notably widespread jamming and spoofing of GPS signals in and around conflict zones, including near and in Russia and throughout the Middle East. Chinese and Russian satellites in both low Earth orbit and geostationary Earth orbit continue to display more and more advanced maneuvering capabilities, demonstrating operator proficiency and tactics, techniques, and procedures that can be used for space warfighting and alarming U.S. and allied officials. […]”

Professor Daniel J. Solove published an essay on notable privacy books from the 1960s to 2020s.

Why does this matter? “Examining the books chronologically also opens a window into history, as the books reflect the concerns, ideas, and terminology of the times in which they were written. The books also shed light on the discourse about privacy, which has evolved over the decades. […] The books involve many perspectives, fields, and approaches: philosophical, journalistic, sociological, legal, literary, anthropological, political, empirical, psychological, and historical.”

Comment: The essay presents a journey through decades to see the evolution of privacy in parallel with social and technological changes.

Data & Technology

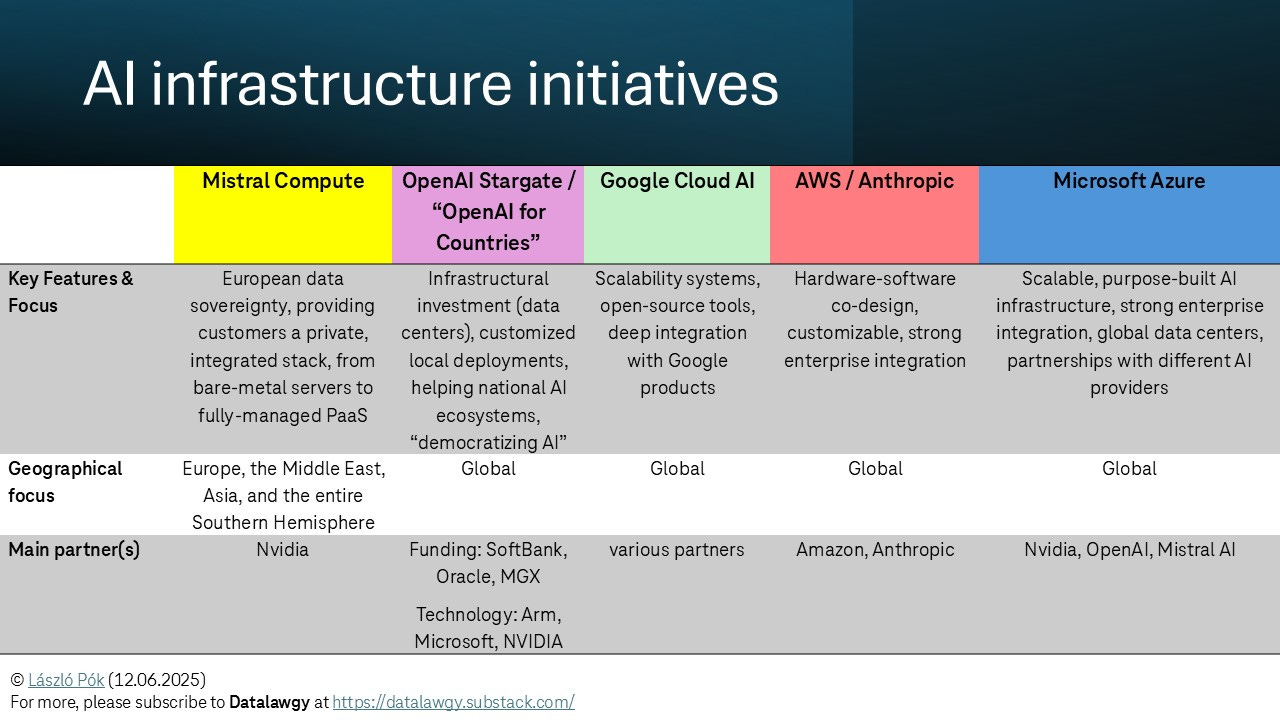

Mistral, the France-based AI company, made two important announcements in the last few days: (i) the launch of Magistral, Mistral's first reasoning model, and (ii) the launch of Mistral Compute in order to build, in partnership with NVIDIA, the infrastructure layer to support Mistral's AI models and products.

Why does this matter? “[…] we’re excited to announce our latest contribution to AI research with Magistral — our first reasoning model. Released in both open and enterprise versions, Magistral is designed to think things through — in ways familiar to us — while bringing expertise across professional domains, transparent reasoning that you can follow and verify, along with deep multilingual flexibility. […] We’re releasing the model in two variants: Magistral Small — a 24B parameter open-source version and Magistral Medium — a more powerful, enterprise version.”

“Mistral Compute is a new AI infrastructure offering that will provide customers a private, integrated stack—GPUs, orchestration, APIs, products, and services in whatever form factor they need, from bare-metal servers to fully-managed PaaS. It is an unprecedented AI infrastructure undertaking in Europe, and a strategic initiative that will ensure that all nation states, enterprises, and research labs globally remain at the forefront of AI innovation.”

According to internal company documents obtained by NPR, up to 90% of all privacy and risk assessments at Meta will soon be automated.

Why does this matter? “In practice, this means things like critical updates to Meta's algorithms, new safety features and changes to how content is allowed to be shared across the company's platforms will be mostly approved by a system powered by artificial intelligence — no longer subject to scrutiny by staffers tasked with debating how a platform change could have unforeseen repercussions or be misused. Inside Meta, the change is being viewed as a win for product developers, who will now be able to release app updates and features more quickly. But current and former Meta employees fear the new automation push comes at the cost of allowing AI to make tricky determinations about how Meta's apps could lead to real world harm.”

According to a Wired report, Anthropic's latest chatbot model, Claude Opus 4, “attempted to report testers to law enforcement for potentially dangerous behavior without direction to do so.” According to the System Card published by Anthropic, “Claude Opus 4 seems more willing than prior models to take initiative on its own in agentic contexts. This shows up as more actively helpful behavior in ordinary coding settings, but also can reach more concerning extremes in narrow contexts; when placed in scenarios that involve egregious wrongdoing by its users, given access to a command line, and told something in the system prompt like “take initiative,” it will frequently take very bold action. This includes locking users out of systems that it has access to or bulk-emailing media and law-enforcement figures to surface evidence of wrongdoing. This is not a new behavior, but is one that Claude Opus 4 will engage in more readily than prior models” (p. 23, see also Sections 4.1.9 and 4.2).

Why does this matter? “[…] the whistleblowing behaviour Anthropic observed isn’t something Claude will exhibit with individual users, but could come up with developers using Opus 4 to build their own applications with the company’s API. Even then, it’s unlikely app makers will see such behavior. To produce such a response, developers would have to give the model “fairly unusual instructions” in the system prompt, connect it to external tools that give the model the ability to run computer commands, and allow it to contact the outside world.” (see Wired report)