In this week's roundup, I'm gathering some of the most important and interesting data, technology & digital law news from the past week.

Legal and regulatory developments

A) New laws, regulatory proposals, enforcement actions

The White House published an AI Action Plan for the USA.

Why does this matter? “The United States is in a race to achieve global dominance in artificial intelligence (AI). Whoever has the largest AI ecosystem will set global AI standards and reap broad economic and military benefits. Just like we won the space race, it is imperative that the United States and its allies win this race. […] An industrial revolution, an information revolution, and a renaissance—all at once. This is the potential that AI presents. The opportunity that stands before us is both inspiring and humbling. And it is ours to seize, or to lose. […] America’s AI Action Plan has three pillars: innovation, infrastructure, and international diplomacy and security. […] This Action Plan sets forth clear policy goals for near-term execution by the Federal government. The Action Plan’s objective is to articulate policy recommendations that this Administration can deliver for the American people to achieve the President’s vision of global AI dominance. The AI race is America’s to win, and this Action Plan is our roadmap to victory.”

China also published a Global AI Governance Action Plan. (The full text of this action plan is available here in English.)

Why does this matter? “‘The two camps are now being formed,’ said George Chen, partner at the Asia Group and co-chair of the digital practice. ‘China clearly wants to stick to the multilateral approach while the U.S. wants to build its own camp, very much targeting the rise of China in the field of AI,’ Chen said.”

The European Commission preliminarily found Temu in breach of the Digital Services Act (DSA) in relation to illegal products on its platform.

Why does this matter? “Evidence showed that there is a high risk for consumers in the EU to encounter illegal products on the platform. Specifically, the analysis of a mystery shopping exercise conducted by the Commission found that consumers shopping on Temu are very likely to find non-compliant products among the offer, such as baby toys and small electronics. […] The preliminary findings sent today by the Commission are without prejudice to the final outcome of the investigation, as Temu now has the possibility to exercise its rights of defence by examining the Commission's investigation file and by replying in writing to the Commission's preliminary findings. In parallel, the European Board for Digital Services will be consulted. If the Commission's preliminary views were to be ultimately confirmed, the Commission would adopt a non-compliance decision finding that Temu is in breach of Article 34 of the DSA. Such a decision could entail fines of up to 6% of the total worldwide annual turnover of the provider and order the provider to take measures to address the breach. A non-compliance decision may also trigger an enhanced supervision period to ensure compliance with the measures the provider intends to take to remedy the breach.”

The European Commission published a list of the companies that have signed the GPAI Code of Practice to date.

Why does this matter? “The Code of Practice helps industry comply with the AI Act legal obligations on safety, transparency and copyright of general-purpose AI models. The General-Purpose AI (GPAI) Code of Practice is a voluntary tool […].” As Euractiv also points out, “several major AI developers had already made their approach to the Code public in the weeks after publication. But, per the (updatable) list published today, Amazon is also signing, along with a number of smaller AI providers. Notably absent at the time of writing are any Chinese AI companies, such as Alibaba, Baidu or Deepseek.” Meta expressly refused to sign the Code of Practice, and Apple has not signed it so far. xAI signed only the third chapter of the Code (the chapter on Safety and Security).

B) Guidelines, opinions & more

The European Commission presented a template for General-Purpose AI model providers to summarise the data used to train their model.

Why does this matter? “This template is a simple, uniform and effective manner for GPAI providers to increase transparency in line with the AI Act, including making such a summary publicly available. […] The public summary will provide a comprehensive overview of the data used to train a model, list main data collections and explain other sources used. This template will also assist parties with legitimate interests, such as copyright holders, in exercising their rights under Union law.”

The European Data Protection Board (EDPB) adopted a Statement on the European Commission’s Recommendation on draft non-binding model contractual terms on data sharing under the Data Act.

Why does this matter? “[…] Considering the close link between data access and sharing under the Data Act and the GDPR, insofar as personal data is concerned, the EDPB has reviewed the MCTs [the draft non-binding model contractual terms on data sharing] and wishes to provide a number of general comments to the Commission. […]”

The Irish Data Protection Commission launched an Adult Safeguarding Toolkit to protect vulnerable adults' data.

Why does this matter? “The Data Protection Commission (DPC) has today launched a new Adult Safeguarding Toolkit to provide organisations and individuals with guidance and resources to protect the personal data of vulnerable adults. At-risk/vulnerable adults describes a person who, by reason of their physical or mental condition or other particular personal characteristics or family or life circumstance (whether permanent or otherwise), is in a vulnerable situation and/or at risk of harm and needs support to protect themselves from harm at a particular time. While not an exhaustive list, this can include individuals suffering from physical or mental conditions (such as cognitive impairment, dementia, acquired brain injury), children with additional needs reaching the age of majority, individuals subject to domestic violence or coercive control, individuals who find themselves homeless, individuals who are subject to financial abuse and individuals who have been trafficked. This initiative aims to ensure compliance with data protection legislation and promote best practices in safeguarding sensitive information. The toolkit offers comprehensive guidance on how to collect, use, store, and share data related to vulnerable adults, while adhering to the principles of the General Data Protection Regulation (GDPR) and the Data Protection Act 2018. It includes practical advice, templates, and examples to help organisations implement effective data protection measures.”

The Dutch Data Protection Authority (Autoriteit Persoonsgegevens) published guidelines for organizations to maintain meaningful human intervention in automated decision-making.

Why does this matter? “Human intervention ensures that a decision is made carefully and aims to prevent, for example, people from being excluded or discriminated against, unintentionally or otherwise, by the outcome of an algorithm. This human intervention should not merely have a symbolic function; it should contribute meaningfully to decision-making.”

The UK's data protection authority, the Information Commissioner's Office (ICO), published guidance explaining the data protection and privacy considerations to take into account regarding the use of profiling in online trust and safety processes.

Why does this matter? “This guidance applies to any organisations that carry out profiling […] as part of their trust and safety processes. It is aimed at user-to-user services who are using, or considering using, profiling to meet their obligations under the Online Safety Act 2023 (OSA). But it also applies to any organisations using, or considering using, these tools for broader trust and safety reasons.”

C) Publications, reports

The European Parliament commissioned a study on AI and Civil Liability.

Why does this matter? The study “critically analyses the EU’s evolving approach to regulating civil liability for artificial intelligence systems. In order to avoid regulatory fragmentation between Member States, the study advocates for a strict liability regime targeting high-risk systems, structured around a single responsible operator and grounded in legal certainty, efficiency and harmonisation.”

The European Parliament published a study on “Technological Aspects of Generative AI in the Context of Copyright”.

Why does this matter? “This in-depth analysis explains the statistical nature of generative AI and how training on copyright-protected data results in persistent functional dependencies with respect to the used data. It highlights the challenges of attribution and novelty detection in these high-dimensional models, emphasizing the limitations of current methodologies. The study provides technical recommendations for traceability and output assessment mechanisms. This study is commissioned by the European Parliament’s Policy Department for Justice, Civil Liberties and Institutional Affairs at the request of the Committee on Legal Affairs.”

The MIT AI Risk project published an AI Risk Mitigation Database.

Why does this matter? The project team extracted 831 mitigations from 13 documents that propose AI risk mitigations, compiled them into an AI Risk Mitigation Database, and used them to develop a draft AI Risk Mitigation Taxonomy. The draft taxonomy has four categories: “(i) Governance & Oversight Controls: Formal organizational structures and policy frameworks that establish human oversight mechanisms and decision protocols. (ii) Technical & Security Controls: Technical, physical, and engineering safeguards that secure AI systems and constrain model behaviors. (iii) Operational Process Controls: Processes and management frameworks governing AI system deployment, usage, monitoring, incident handling, and validation. (iv) Transparency & Accountability Controls: Formal disclosure practices and verification mechanisms that communicate AI system information and enable external scrutiny.”

The World Economic Forum published a report on “Rethinking Media Literacy: A New Ecosystem Model for Information Integrity”.

Why does this matter? “In an era marked by unprecedented speed, scale, and sophistication in the creation and dissemination of information, the ability to critically engage with that information has become essential for safeguarding public trust, democratic discourse and individual autonomy. Media and information literacy (MIL) stands as a foundational response to this challenge, empowering individuals to access, analyse, evaluate and responsibly produce media content across formats and platforms.”

The EU Agency for Cybersecurity (ENISA) published its report on “Telecom Security Incidents 2024”.

Why does this matter? “The 2024 annual summary contains reports of 188 incidents submitted by national authorities from 26 EU Member States and 2 European Free Trade Association (EFTA) countries. This is an increase of 20,5% over the 2023 with 156 incidents from 26 EU Member States and 1 EFTA country.”
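
The 20.5% figure follows directly from the two incident counts quoted above. A minimal sketch of the arithmetic, where the function name `percent_increase` is purely illustrative and not taken from the ENISA report:

```python
def percent_increase(previous: int, current: int) -> float:
    """Return the relative change from `previous` to `current`, in percent."""
    return (current - previous) / previous * 100


# Incident counts as quoted from the ENISA summary: 156 (2023) and 188 (2024).
increase = percent_increase(156, 188)
print(f"{increase:.1f}%")  # prints "20.5%"
```

Note that the two years do not cover exactly the same set of reporting countries (one EFTA country in 2023, two in 2024), so the comparison is indicative rather than like-for-like.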

Germany's Federal Office for Information Security (BSI) published a white paper on bias in AI. (The document is in German.)

Why does this matter? “This publication is intended to provide developers, providers and operators of AI systems with a first introduction to the bias topic. Bias species have a high diversity and can be found in different phases of the AI system lifecycle. A selection of the most relevant types of bias as well as an assignment to the phase of the lifecycle in which they occur is presented. The detection of bias is possible both in data and in AI models that have already been trained. A selection of qualitative and quantitative detection options is presented. The whitepaper then gives an overview of the methods that are used to prevent bias in an AI system. These are divided into pre-, in- and post-processing methods, depending on the time at which they can be applied. Finally, the whitepaper examines the interaction between bias and cybersecurity.”

Data, Technology & Company news

According to a TechCrunch report, “security researchers at Google and Microsoft say they have evidence that hackers backed by China are exploiting a zero-day bug in Microsoft SharePoint.” The European Commission, the EU Agency for Cybersecurity (ENISA), the Cybersecurity Service for the Union institutions, bodies, offices and agencies (CERT-EU), and the EU CSIRTs network are also closely following the active exploitation of vulnerabilities in on-premises SharePoint Servers.

Why does this matter? “Dozens of organizations have already been hacked, including across the government sector. The bug is regarded as a zero-day because the vendor — Microsoft, in this case — had no time to issue a patch before it was actively exploited. Microsoft has since rolled out patches for all affected versions of SharePoint, but security researchers have warned that customers running self-hosted versions of SharePoint should assume they have already been compromised. […] This is the latest hacking campaign linked to China in recent years. Hackers backed by China were accused of targeting self-hosted Microsoft Exchange email servers in 2021 as part of a mass-hacking campaign. According to a recent Justice Department indictment accusing two Chinese hackers of masterminding the breaches, the so-called “Hafnium” hacks compromised contact information and private mailboxes from more than 60,000 affected servers.”

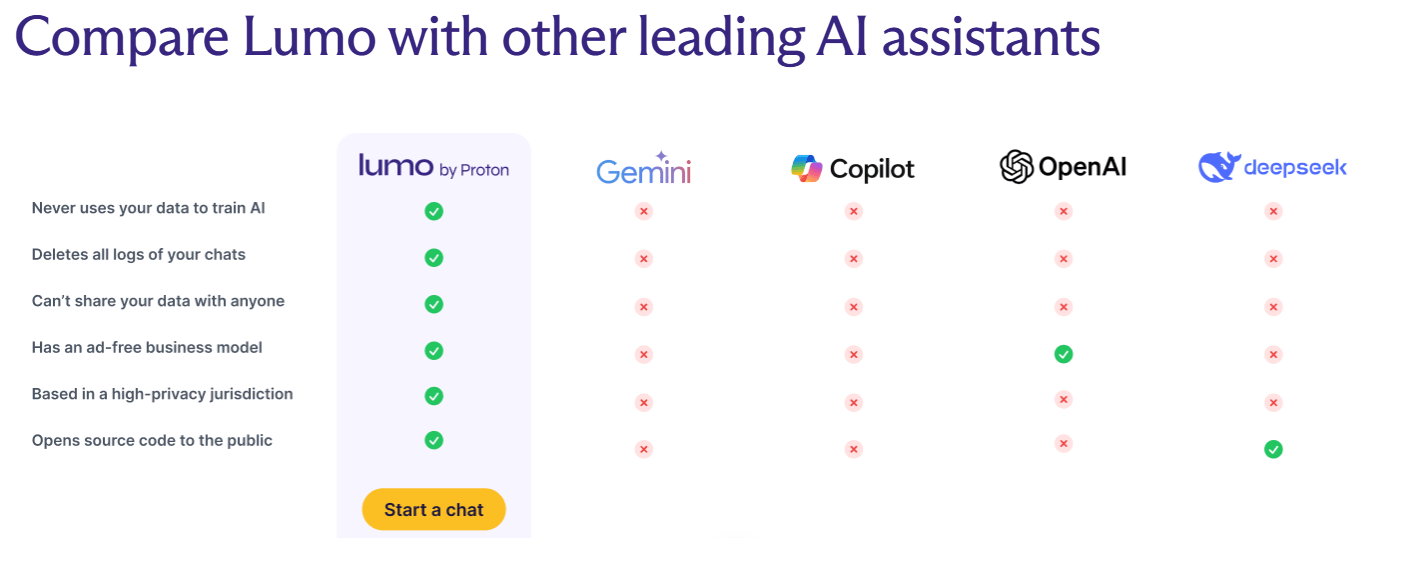

Proton released its privacy-first AI assistant, Lumo.

Why does this matter? “Like all Proton services, Lumo was built around privacy, security, and transparency. In contrast to other companies working on AI, Proton doesn’t make money by selling your data. We’re supported exclusively by our community, not advertisers, and our base in privacy-friendly Europe gives us the legal protections to ensure that we can live up to our promises. Most important, we are owned by the nonprofit Proton Foundation, whose sole mission is to advance privacy and freedom.”

(Source: Proton, https://proton.me/blog/lumo-ai)

OpenAI introduced ChatGPT agent.

Why does this matter? “At the core of this new capability is a unified agentic system. It brings together three strengths of earlier breakthroughs: Operator’s ability to interact with websites, deep research’s skill in synthesizing information, and ChatGPT’s intelligence and conversational fluency. ChatGPT carries out these tasks using its own virtual computer, fluidly shifting between reasoning and action to handle complex workflows from start to finish, all based on your instructions. Most importantly, you’re always in control. ChatGPT requests permission before taking actions of consequence, and you can easily interrupt, take over the browser, or stop tasks at any point.”

Microsoft introduced agentic browsing by enabling Copilot Mode in Edge.

Why does this matter? “When you open a new tab in Edge with Copilot Mode on, you’ll see a clean, streamlined page with a single input box that brings together chat, search and web navigation. Copilot understands your intent and helps you get started faster. Copilot Mode also sees the full picture across your open tabs, and you can even instruct it to handle some tasks. Turn your browser into a tool that helps you compare, decide and get things done with ease. Over time, we will continue to improve and add features to Copilot Mode. And if at any time you prefer today’s classic experience on Edge, you can change this in your browser settings.”

OpenAI introduced study mode in ChatGPT, “a learning experience that helps you work through problems step by step instead of just getting an answer. Starting today, it’s available to logged in users on Free, Plus, Pro, Team, with availability in ChatGPT Edu coming in the next few weeks.”

Why does this matter? “[…] study mode is powered by custom system instructions we’ve written in collaboration with teachers, scientists, and pedagogy experts to reflect a core set of behaviors that support deeper learning including: encouraging active participation, managing cognitive load, proactively developing metacognition and self reflection, fostering curiosity, and providing actionable and supportive feedback. These behaviors are based on longstanding research in learning science and shape how study mode responds to students.”

As Fortune reported, “a group of 40 AI researchers, including contributors from OpenAI, Google DeepMind, Meta, and Anthropic, are sounding the alarm on the growing opacity of advanced AI reasoning models.”

Why does this matter? “In a new paper, the authors urge developers to prioritize research into “chain-of-thought” (CoT) processes, which provide a rare window into how AI systems make decisions. They are warning that as models become more advanced, this visibility could vanish.”