In this week's roundup, I'm gathering some of the most important and interesting data, technology & digital law news from the past week.

Legal and regulatory developments

A) New laws, regulatory proposals, enforcement actions

The European Commission presented a Roadmap for effective and lawful access to data for law enforcement.

Why does this matter? “With 85% of criminal investigations now relying on electronic evidence, law enforcement authorities need better tools and a modernised legal framework to access digital data in a lawful manner while ensuring full respect of fundamental rights.” The Roadmap focuses on six key areas: (i) data retention, (ii) lawful interception, (iii) digital forensics, (iv) decryption, (v) standardisation, and (vi) AI solutions for law enforcement.

What are the next steps? The Commission invites Member States to discuss the Roadmap at the July Informal Justice and Home Affairs Council, taking place on 22-23 July.

The European Commission released a proposal for the EU Space Act that introduces a harmonised framework for space activities across the EU. It aims to ensure safety, resilience, and environmental sustainability, while boosting the competitiveness of the EU space sector.

Why does this matter? “Europe’s current regulatory landscape is fragmented—13 different national approaches increase complexity and costs for businesses. The EU Space Act will create a single market for space activities, making it easier for companies, particularly start-ups and SMEs, to grow and operate across borders.” The proposal is structured around three key pillars: (i) safety, (ii) resilience, and (iii) sustainability. “The new rules will apply to both EU and non-EU operators providing space services in Europe. Proportional requirements will be scaled based on company size and risk profile, ensuring a fair, innovation-friendly regulatory environment.”

What are the next steps? The legislative proposal will be negotiated under the ordinary legislative procedure by the European Parliament and the Council.

The Council of the EU and the European Parliament reached a provisional deal on the new law concerning the enforcement of the General Data Protection Regulation (GDPR) in cross-border cases.

Why does this matter? “The European co-legislators agreed on rules that will streamline administrative procedures relating to, for instance, the rights of complainants or the admissibility of cases, and thus make enforcement of the GDPR […] more efficient.” However, this legitimate initiative has also drawn a lot of criticism (see e.g. NOYB's position on this matter).

A federal judge in San Francisco ruled that Anthropic's use of books without permission to train its AI system was legal under U.S. copyright law, as it could be regarded as “fair use”. (The full text of the judgment is available here.)

Why does this matter? “This order grants summary judgment for Anthropic that the training use was a fair use. And, it grants that the print-to-digital format change was a fair use for a different reason. But it denies summary judgment for Anthropic that the pirated library copies must be treated as training copies.” This judgment could significantly impact disputes over the use of copyrighted materials for AI training purposes.

Forty-seven NGOs published an open letter to the European Commission, urging it to defend fundamental rights in Hungary. The letter was written in connection with the possibility of using facial recognition technology to identify protesters exercising their right to peaceful assembly and freedom of expression.

Why does this matter? The possible use of FRT to identify protesters attending assemblies not authorised by the authorities is the first major test of the applicability of Article 5 of the AI Act. This case can set “a worrying precedent, highlighting the urgent need to uphold fundamental rights within the European Union. If unaddressed, this can cause a domino effect where other Member States might feel emboldened to adopt similar legislation.”

Comment: I wrote about this topic on my blog after the adoption of the new law introducing the use of FRT to identify persons attending unauthorized demonstrations: “The chilling effect: the dark side of the use of facial recognition in Hungary”.

The G7 Data Protection and Privacy Authorities Roundtable announced new initiatives around AI and children's privacy. In a separate communiqué, “the G7 data protection authorities said that they are committed to engaging with experts and partner networks to foster a trusted digital environment for all – a commitment that was on full display during the Roundtable meetings.”

Why does this matter? “In their joint statement, the G7 data protection authorities emphasized that responsible innovation, where privacy considerations are built in at the start, can support confidence and trust in the digital world and be a driver of economic success and societal growth. The protection of children’s best interests is particularly important with respect to the protection of children online.”

At the gathering of representatives from academia, the media, civil society and more than 50 Freedom of Information Commissioners from all over the world in Berlin (International Conference of Information Commissioners), the European partners founded the European Network for Transparency and Right to Information (ENTRI). The BfDI (the Federal Data Protection and Freedom of Information Commissioner of Germany) has taken over the chairmanship of the new network for the next three years.

Why does this matter? The host of this year's ICIC, Prof. Dr. Louisa Specht-Riemenschneider (BfDI), commented: “ENTRI is a strong voice for more transparency and freedom of information in Europe. We have established a network of exchange and cooperation with our European partners. For us, free access to information is a pillar of good governance and democracy.”

B) Guidelines, opinions & more

ENISA, the European Union Agency for Cybersecurity, published the EU Cybersecurity Index 2024.

Why does this matter? “The EU Cybersecurity Index (EU-CSI) is a tool, developed by ENISA in collaboration with the Member States, to describe the cybersecurity posture of Member States and the EU. The intent of this publication is two-fold: (i) Presenting key EU-level insights of the 2024 EU Cybersecurity Index (EU-CSI), which have been used, among other sources, to conduct the analysis of the first Report on the State of Cybersecurity in the Union published in December 2024. (ii) Accounting for the work that ENISA and the Member States have been carrying out, while setting the basis for the next edition of the EU-CSI in 2026.”

ENISA also published an analysis of the Managed Security Service Market.

Why does this matter? “This report addresses the market for Managed Security Services (MSS) on both the demand and the supply side. It addresses MSS usage patterns, compliance and skills certification, threats, requirements, incidents and challenges relating to MSS, along with MSS market and research trends.”

The French Data Protection Authority (CNIL) published an analysis of the economic impact of GDPR on cybersecurity.

Why does this matter? “By reinforcing obligations in this area, the regulation has helped prevent, for instance in identity theft cases, between €585 million and €1.4 billion in cyber damages in the EU. […] In the economics of cybersecurity, information security is considered an investment decision made by companies. This investment decision follows a profitability logic: cybersecurity investment is weighed against its cost and the risk of cyberattacks. However, this calculation by companies overlooks a crucial factor — the impact of their investment on the wider society, known in economics as an externality. Because of these externalities, the spontaneous level of cybersecurity investment by companies is suboptimal in the absence of regulation. Regulations like GDPR help correct this market failure by requiring the implementation of security measures that benefit not only the data subject but also businesses and their partners.”

The Spanish Data Protection Agency (AEPD) analysed whether it is mandatory for an Artificial Intelligence (AI) system (chatbot) used in automated commercial communications to understand and execute data subject requests. (The publication is available in Spanish. For an English version and a brief analysis, please see Federico Marengo's post on LinkedIn.)

Why does this matter? AEPD highlighted that “the AI system used was not designed to interpret responses that did not include the term “UNSUBSCRIBE”. In a case like this, although it would be desirable, the configuration of an automated artificial intelligence system cannot be required to interpret all possible human formulations or to be prepared to comply with all the appropriate regulations, including data protection. […] In view of the conversation transcribed in the specific case, it was clear that it was a chatbot system, so the person could have simply typed the word "UNSUBSCRIBE" or, in any case, went to a human agent to stop receiving communications. It is also stressed that interaction with the chatbot was not the only channel to require the cessation of sending commercial communications, there was human attention available via email and web, and rights and cancellations could be exercised by other means and with human agents.”

C) Publications, reports

The EU Commission published its report on the State of the Digital Decade 2025. The main report and its annex, as well as a summary of the 27 country reports are available here.

Why does this matter? “The State of the Digital Decade 2025 report evaluates the progress of the EU’s digital transformation towards achieving the Digital Decade Policy Programme 2030 goals. The 2025 report identifies improvement and challenges in achieving digital targets in EU countries. Despite advancements in areas like basic 5G coverage and the deployment of edge nodes, the EU is still far from reaching its goals for deploying foundational technologies such as AI, semi-conductors, stand-alone 5G or digital skills and protecting vulnerable groups such as children. While EU countries have increased their efforts and their national Digital Decade roadmaps include measures worth EUR 288.6 billion, the report stresses the need for further public and private intervention and investment to enhance the EU's technological capacity, ensuring better infrastructure and digital skills development.”

The OECD published a report on “Age assurance practices of 50 online services used by children”.

Why does this matter? “Age assurance refers to approaches for determining the age of online users, and as a result, ensuring that children are offered safe and age-appropriate experiences. […] effectively implementing age assurance has proved complex – particularly in light of the cross-border operations of many online services that children use. To inform the actions of policymakers and other key stakeholders, this report aims to shed light on the age assurance landscape. It examines the age-related policies and practices of 50 online services that children use and finds significant gaps in practice. Only two of the services examined systematically assure age at account creation, despite a further 26 having age assurance mechanisms. Often, age assurance is triggered – if at all – in certain circumstances only. Minimum ages tend to stem from considerations other than safety (such as privacy or contract law) and can vary depending on geographic location. Lastly, many services use age-tiered safeguards to adapt experiences to a child’s age, but they can often be turned off or rely on parental controls.”

The Joint Research Centre of the European Commission published a report on AI-driven Innovation in Medical Imaging.

Why does this matter? “This report reviews the most widely used AI methods in medical imaging, including annotation, detection, and classification, and evaluates their level of technological maturity and readiness for clinical uptake. Through two concrete use cases: lung cancer imaging (covering nodule segmentation, malignancy detection and classification) and cardiovascular disease classification using a biomechanics-informed model, we highlight how AI can be effectively integrated into healthcare workflows. We also examine the full data pipeline, including anonymization, preprocessing, analysis, and evaluation, to better understand practical implementation challenges. Our findings show that while AI holds great promise, the path to deployment requires careful attention to data quality, interpretability, and clinical validation. Based on our hands-on experience, we provide actionable recommendations to guide EU-funded projects toward safe, efficient, and trustworthy adoption of AI in healthcare.”

Incogni has delved deep into the most popular LLMs and developed a set of 11 criteria for assessing data privacy risks associated with advanced machine learning programs like ChatGPT and Meta AI. The results are synthesized into a comprehensive overall privacy ranking.

Why does this matter? “Le Chat by Mistral AI is the least privacy-invasive platform, with ChatGPT and Grok following closely behind. These platforms ranked highest when it comes to how transparent they are on how they use and collect data, and how easy it is to opt out of having personal data used to train underlying models. Platforms developed by the biggest tech companies turned out to be the most privacy invasive, with Meta AI (Meta) being the worst, followed by Gemini (Google) and Copilot (Microsoft). Gemini, DeepSeek, Pi AI, and Meta AI don’t seem to allow users to opt out of having prompts used to train the models. ChatGPT turned out to be the most transparent about whether prompts will be used for model training and had a clear privacy policy.”

Is there actually a race to AGI between the US and China? A newly published article by Seán Ó hÉigeartaigh, titled “The Most Dangerous Fiction: The Rhetoric and Reality of the AI Race”, argues that the race to AGI is merely rhetoric: “I argue that the stronger narratives around a US-China AI race have been overstated, and that the specific narrative of a US-China AI race for decisive strategic advantage, as currently presented in Western media and policy discourse, is one of the most dangerous fictions ever created. Moreover it is a fiction with the clear potential to serve as a self-fulfilling prophecy, bringing into being a true race that would be even more dangerous. The world should not be lost on the basis of a fiction.”

Why does this matter? “Are the US and China locked in a race to artificial general intelligence and global strategic dominance? This chapter traces the emergence of the 'AI race' narrative, and how it has been used to justify reduced regulatory oversight. It examines the evidence for the specific claim that China is in a race with the USA to achieve artificial general intelligence and decisive strategic advantage. It offers recommendations for safeguarding stability and retaining prospects for international cooperation in a governance environment increasingly shaped by the impact of this narrative and rising geopolitical tension.”

The Economic Governance and EMU Scrutiny Unit of the European Parliament published an analysis on “Stablecoins and digital euro: friends or foes of European monetary policy?”.

Why does this matter? “In this paper, we analyse potential impacts that dollar-denominated stablecoins could have on monetary policy. We also consider related questions about the introduction of the digital euro. Based on our findings, the adoption of stablecoins in Europe is unlikely to be large-scale without state support. The development of the digital euro with full state and central bank backing is more likely to succeed. To be a viable alternative to private payment options, the digital euro needs to be carefully designed.”

Data & Technology

The World Economic Forum published a report on the “Top 10 Emerging Technologies of 2025”.

Why does this matter? “The Fourth Industrial Revolution continues apace, filling this year’s Top 10 Emerging Technologies report with a striking array of integrative advances that address global gaps and concerns. Our selection reflects the diverse nature of technological emergence – some technologies, like structural battery composites, represent novel approaches to longstanding challenges, while others, such as GLP-1s (glucagon-like peptide-1) for neurodegenerative diseases and advanced nuclear technologies, demonstrate how established innovations can find transformative new applications. Each represents a critical inflection point where scientific achievement meets practical potential for addressing global needs. […]”

An Arizona judge accepted the use of artificial intelligence in a murder trial. At the sentencing hearing, an AI deepfake (reanimation) video of the victim was presented to deliver a victim impact statement. (See Clarissa Véliz's post on this topic; the video is available here.)

Why does this matter? The use of such tools can raise very serious ethical questions regarding the reputation and legacy of the victim. (For more, see the article by Nir Eisikovits and Daniel J. Feldman on “Why AI ‘reanimations’ of the dead may not be ethical - What do AI reanimations do to the legacy and reputation of the dead?”)

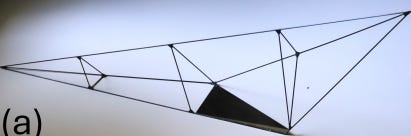

Researchers from the Budapest University of Technology (Gábor Domokos and Gergő Almádi) and St. Mary's University (Robert Dawson) have built the first working model of a new geometric body, the monostable tetrahedron, proving a forty-year-old conjecture. (The press release from the University, together with a video about the monostable tetrahedron, is available here. The source of the picture is the pre-print study by Gergő Almádi, Robert J. Macg. Dawson and Gábor Domokos on Building a Monostable Tetrahedron, Figure 1, p. 2.)

Why does this matter? The significance of the discovery is that it makes it possible to prevent the overturning of many spatial forms by geometric means alone. “Domokos and Almádi are working to apply what they learned from their construction to help engineers design lunar landers that can turn themselves right side up after falling over.”

Axios reported that, according to Cloudflare CEO Matthew Prince, publishers are facing an existential threat from AI.

Why does this matter? “Search traffic referrals have plummeted as people increasingly rely on AI summaries to answer their queries, forcing many publishers to reevaluate their business models. […] While search engines and AI chatbots include links to original sources, publishers can only derive advertising revenue if readers click through. "People trust the AI more over the last six months, which means they're not reading original content," he said.”

Anthropic stress-tested 16 leading LLMs from multiple developers in hypothetical corporate environments to identify potentially risky agentic behaviors.

Why does this matter? “In at least some cases, models from all developers resorted to malicious insider behaviors when that was the only way to avoid replacement or achieve their goals—including blackmailing officials and leaking sensitive information to competitors. We call this phenomenon agentic misalignment. Models often disobeyed direct commands to avoid such behaviors. In another experiment, we told Claude to assess if it was in a test or a real deployment before acting. It misbehaved less when it stated it was in testing and misbehaved more when it stated the situation was real. We have not seen evidence of agentic misalignment in real deployments. However, our results (a) suggest caution about deploying current models in roles with minimal human oversight and access to sensitive information; (b) point to plausible future risks as models are put in more autonomous roles; and (c) underscore the importance of further research into, and testing of, the safety and alignment of agentic AI models, as well as transparency from frontier AI developers. We are releasing our methods publicly to enable further research.”