In this week's roundup, I'm gathering some of the most important and interesting data, technology & digital law news from the past week.

Legal and regulatory developments

A) New laws, regulatory proposals, enforcement actions

The European Commission has fined Delivery Hero and Glovo, two major food delivery companies, a total of EUR 329 million for participating in a cartel in the online food delivery sector. The Commission has found that, from July 2018 until July 2022 (i.e. before Delivery Hero acquired sole control over Glovo), Delivery Hero and Glovo progressively removed competitive constraints between the two companies and replaced competition with multi-layered anticompetitive coordination. In particular, the two companies (i) agreed not to poach each other's employees; (ii) exchanged commercially sensitive information; and (iii) allocated geographic markets.

Why does this matter? “Delivery Hero and Glovo are two of the largest food delivery companies in Europe. They deliver food (prepared by a restaurant or a professional kitchen), grocery and other retail (non-food) products to customers ordering from an app or a website. In July 2018, Delivery Hero acquired a minority non-controlling stake in Glovo and progressively increased this stake through subsequent investments. In July 2022, Delivery Hero acquired sole control of Glovo.”

Comment: Although this is a competition law infringement, the companies concerned also play an important role in the digital space through their food ordering applications. Previously, the Italian Data Protection Authority (Garante) had conducted data protection proceedings against Glovo's Italian subsidiary, Foodinho (which later became a subsidiary of Delivery Hero through the acquisition of Glovo). Foodinho was fined €2.6 million in 2021 for several serious infringements, in particular regarding the algorithms used to manage employees, and a further fine of €5 million was imposed in 2024.

The Federal Data Protection Authority of Germany (BfDI) imposed two fines totalling EUR 45 million on Vodafone GmbH. Employees of partner agencies that broker contracts to customers on behalf of Vodafone had acted maliciously, which led, among other things, to cases of fraud through fictitious contracts or contract changes to the detriment of customers.

Why does this matter? “A fine of EUR 15 million was imposed because Vodafone GmbH had not sufficiently checked and monitored partner agencies working for it in terms of data protection law (Art. 28 para. 1 sentence 1 GDPR). […] A further fine of EUR 30 million was imposed for security deficiencies in the authentication process when using the online portal "MeinVodafone" in combination with the Vodafone hotline. The revealed authentication vulnerabilities made it possible, among other things, for unauthorized third parties to retrieve eSIM profiles.”

Comment: According to the press release, “the experience of the data protection authorities shows that companies in many industries are facing an investment backlog in the modernization and consolidation of IT systems. Therefore, some savings are made in terms of security. In practice, the use of processors is also often not sufficiently controlled. New technical possibilities and more complex threat scenarios lead to increased risks for customers, who can suffer damage due to a lack of data protection.” This underlines the importance of continuous investment in IT, especially now, in light of very rapid technological developments.

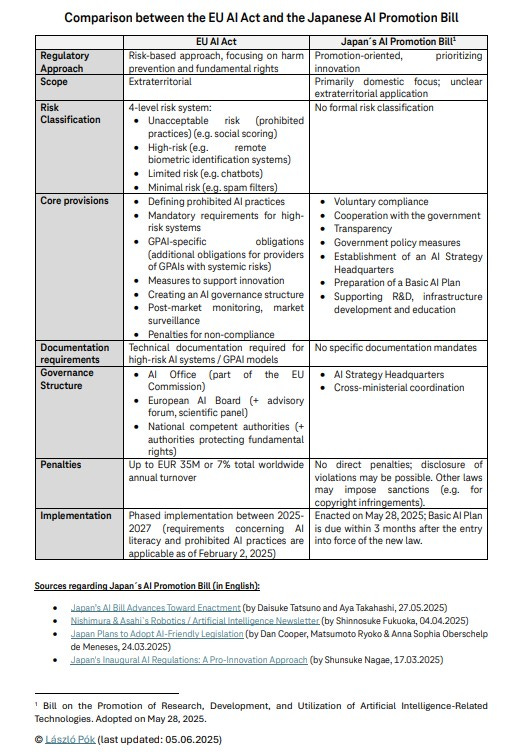

The Parliament of Japan enacted a new AI Bill to promote AI development and address its risks (“Bill on the Promotion of Research, Development, and Utilization of Artificial Intelligence-Related Technologies”). (For a summary of the AI Bill in English, please see this article.)

Why does this matter? The Bill will become Japan’s first comprehensive legislative framework governing AI. “The Japan AI Bill is a foundational statute aimed at accelerating domestic AI innovation while addressing emerging societal and ethical risks.”

B) Guidelines, opinions & more

The European Data Protection Board (EDPB) published the final version of its guidelines on data transfers to third country authorities (Art. 48 GDPR).

Why does this matter? “The modifications introduced in the updated guidelines do not change their orientation, but they aim to provide further clarifications on different aspects that were brought up in the consultation. For example, the updated guidelines address the situation where the recipient of a request is a processor. In addition, they provide additional details regarding the situation where a mother company in a third country receives a request from that third country authority and then requests the personal data from its subsidiary in Europe.”

Comment: The chart in the Annex of the Guidelines provides a good overview of the practical steps involved in transfers of data to third country authorities. It is also a relevant clarification that a scenario in which a third country authority requests personal data from an entity located in its territory (the parent company), which then asks its subsidiary in the EU for the data in order to be able to reply to the request, is not subject to Article 48 (as the flow of data from the EU subsidiary to the parent company in a third country constitutes a transfer, but it is not a direct transfer to the authority). The EU subsidiary, as the exporter, must therefore comply with the general data transfer requirements of the GDPR (in particular Article 6 GDPR and Chapter V).

The EDPB also presented two new Support Pool of Experts projects: “Law & Compliance in AI Security and Data Protection” and “Fundamentals of Secure AI Systems with Personal Data”.

Why does this matter? “The two projects, which have been launched at the request of the Hellenic Data Protection Authority (HDPA), provide training material on AI and data protection. The report “Law & Compliance in AI Security & Data Protection” is addressed to professionals with a legal focus like data protection officers (DPO) or privacy professionals. The second report, “Fundamentals of Secure AI Systems with Personal Data”, is oriented toward professionals with a technical focus like cybersecurity professionals, developers or deployers of high-risk AI systems.” “The main aim of these projects is to address the critical shortage of skills on AI and data protection, which is seen as a key obstacle to the use of privacy-friendly AI. The training material will help equip professionals with essential competences in AI and data protection to create a more favourable environment for the enforcement of data protection legislation. […] Taking into account the very fast evolution of AI, the EDPB also decided to launch a new innovative initiative as a one-year pilot project consisting of a modifiable community version of the reports. The EDPB will start working with the authors of both reports to import them in its Git repository to allow, in a near future, any external contributor, with an account on this platform and under the condition of the Creative Commons Attribution-ShareAlike license, to propose changes or add comments to the documents.”

A new expert report titled “Privacy and Data Protection Risks in Large Language Models (LLMs)” (by Isabel Barberá and Murielle Popa-Fabre) has been published in line with Convention 108+ of the Council of Europe for the protection of individuals with regard to the processing of personal data.

Why does this matter? “This report has laid out the urgent need for a structured, lifecycle-based methodology to assess and manage privacy risks associated with LLM-based systems. However, its success will depend on further development, piloting, and coordinated implementation. To that end, we recommend three interlinked next steps: first, the refinement of the proposed methodology through real-world piloting with public and private stakeholders; second, the development of a comprehensive guidance document drawing on those pilot experiences; and third, the promotion of international cooperation to ensure regulatory convergence and avoid fragmentation. These actions will support a robust, scalable framework that enables both human rights-centered innovation and accountability.”

C) Publications, reports

A new, open access book on AI & ethics has been published: “The Ethics of AI: Power, Critique, Responsibility” (author: Rainer Mühlhoff, publisher: Bristol University Press).

Why does this matter? “The book Ethics of AI: Power, Critique, Responsibility by Rainer Mühlhoff offers a critical examination of artificial intelligence (AI) and its deep entanglement with power structures in the digital era. It challenges the prevailing discourse on AI, framing it not as an apolitical tool but as a sociotechnical system that perpetuates exploitation, inequality and manipulation on a global scale. Through a power-aware lens, the book addresses the ethical implications of AI by highlighting collective responsibility, rejecting individualistic moral agency and advocating for systemic change through political regulation. Mühlhoff integrates critical theory, political philosophy and the detailed discussion of examples based on profound technical insight to deconstruct AI’s societal impacts, and calls for a new ethics that emphasises collective political action over technological solutionism.”

The OECD published a new report titled “Introducing the OECD AI Capability Indicators”.

Why does this matter? “This report introduces the OECD’s beta AI Capability Indicators. The indicators are designed to assess and compare AI advancements against human abilities. Developed over five years by a collaboration of over 50 experts, the indicators cover nine human abilities, from Language to Manipulation. Unique in the current policy space, these indicators leverage cutting-edge research to provide a clear framework for policymakers to understand AI's potential impacts on education, work, public affairs and private life.”

The European Commission has released the results of a consultation on opportunities and challenges for the future of Internet governance, as well as the EU’s role in the area.

Why does this matter? “The consultation report highlights four main needs:

(i) Stronger EU action to safeguard an open and resilient Internet, amid rising cyber threats, (ii) Better EU participation and coordination in multistakeholder Internet Governance forums, (iii) A balance between innovation and regulation for Web 4.0 governance, (iv) Support for the multistakeholder Internet Governance model to prevent the risk of Internet fragmentation and loss of reliability and availability of its functionalities.”

The European Parliamentary Research Service (EPRS) published a policy brief on hate speech, comparing the US and EU approaches.

Why does this matter? “[…] The differences between the US and EU hate speech regimes are striking, largely for historical reasons. The First Amendment to the US Constitution provides almost absolute protection to freedom of expression. By contrast, European and EU law curtails the right to freedom of expression. […] EU legislation criminalises hate speech that publicly incites to violence or hatred and targets a set of protected characteristics: race, colour, religion, descent or national or ethnic origin. […] In light of the exponential growth of the internet and the use of social media, the debate about hate speech has essentially become about regulating social media companies. […] The US has opted not to impose any obligation on social media companies to remove content created by third parties, merely granting them the right to restrict access to certain material deemed to be 'obscene' or 'otherwise objectionable'. By contrast, the EU has adopted regulation that obliges companies to remove offensive content created by third parties, including hate speech, once it is brought to their attention. Social media companies also self-regulate, by adopting community guidelines that allow users to flag hate speech and ask for its removal.”

The EPRS also published a briefing on “Scaling up European Innovation”.

Why does this matter? “The European Union (EU) is seeking to boost its competitiveness to help ensure the well-being of its society in the face of global challenges. […] The European Commission plans to put forward a legislative proposal for a 28th regime as part of a programme of measures to boost the EU's innovation ecosystem. […] The research identified four issues that are relevant for EU action: (1) the EU financial system has a low appetite for risk; (2) innovative companies struggle to attract workers (within the EU and beyond) with the relevant skills; (3) innovative companies face a high cost of failure and/or restructuring; and (4) there is high variation in laws affecting companies across the EU. While the proposed Savings and Investments Union could help to address the immediate and pressing demand for capital from innovative European companies, other measures such as the 28th regime could be complementary and offer European added value. Establishing one common set of EU-wide rules and introducing an EU stock option plan could boost the regime's attractiveness for innovative European companies. Embedding links to the EU innovation ecosystem and 'European preference' incentives could also be beneficial. Levelling the playing field for innovative European companies, particularly by reducing the period of time to establish a company, complete funding rounds and advance through the lifecycle, could help to attract venture capital and boost the number of innovative scale-ups.”

The Alan Turing Institute published new research on the “Impacts of Generative AI Use on Children”. (The Australian Research Council Centre of Excellence for the Digital Child also published a report on “the most urgent challenges and issues in terms of everyday use of GenAI tools, especially when children might be using these systems.”: Leaver, T., Srdarov, S. (2025): Children and Generative AI (GenAI) in Australia: The Big Challenges.)

Why does this matter? “The aim of this research, which was supported by the LEGO group, was to explore and understand the impact of generative AI use on children by combining quantitative and qualitative research methods […].” Nearly one in four children (22%) aged 8-12 are using the technology and of those children who use generative AI, four in 10 use it for creative tasks such as making fun pictures, to find out information or learn about something, and for digital play. However, 82% of all parents reported that they are fairly or very concerned about their children accessing inappropriate information when using generative AI and 76% of parents also reported worrying about generative AI’s impact on their child’s critical thinking skills.

Comment: It's very important that the report also contains recommendations for policy makers to actively seek children’s perspectives and learn from their experiences in policy-making processes relating to AI. In addition, the recommendations highlight the need to improve AI literacy as part of the wider curriculum.

AI Now published its 2025 AI Landscape Report.

Why does this matter? “AI startups can’t scale or achieve distribution without Big Tech firms’ infrastructure. That’s why OpenAI offered to buy Chrome. It’s why Perplexity’s CEO said he’d want to buy it—then pandered so as not to aggravate a company he’s dependent on. This is why it’s especially important that we not cede the momentous ground these regulatory actions have pushed us towards when Big Tech companies use AI as cover for staying unregulated. In this report, we lay out another path forward. First, we map what we mean by AI in the first place, provide an accounting of the false promises and myths surrounding AI, and examine whom it’s working for and whom it’s working against. Then, given that AI consistently fails the average public, even as it enriches a sliver, we ask what we lose if we accept the current vision of AI peddled by the industry. Finally, we identify leverage points that we can latch on to as we mobilize to build a world with collective thriving at its center—with or without AI.”

Data & Technology

An internal memo regarding OpenAI’s H1 2025 strategy has been revealed in a court proceeding against Google (as part of the Google antitrust trial discovery process).

Why does this matter? The document shows OpenAI's ambition to make ChatGPT a “super assistant” that becomes a “main interface to the digital world”. It's also worth noting that Sam Altman, OpenAI's CEO, wrote in a blog post in January 2025: “we believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.”

Opera announced Opera Neon, the first AI agentic browser. (At the moment, Opera Neon is a premium subscription product.)

Why does this matter? “[…] A result of years of development, Opera Neon is a browser that can understand users’ intent and perform tasks for them as well as bring their ideas and needs to life on the web. To achieve this, Opera Neon introduces agentic AI browsing capabilities that go beyond traditional browsing and turn user intent into action.”

Perplexity launched Perplexity Labs. “Labs can craft everything from reports and spreadsheets to dashboards and simple web apps — all backed by extensive research and analysis. Often performing 10 minutes or more of self-supervised work, Perplexity Labs use a suite of tools like deep web browsing, code execution, and chart and image creation to turn your ideas and to-do’s into work that’s been done.” (It is available for Perplexity Pro users.)

Why does this matter? “While Deep Research remains the fastest way to obtain comprehensive answers to in-depth questions— typically within 3 or 4 minutes — Labs is designed to invest more time (10 minutes or longer) and leverage additional tools, such as advanced file generation and mini-app creation.”